TIOVX User Guide

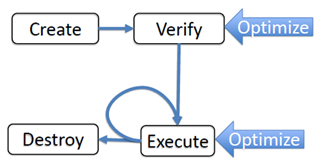

A given OpenVX graph passes through the four states shown in the diagram. These states are described below.

At init time of an application using OpenVX, the tivxInit API is called on each core used by the OpenVX application. One purpose of this init API is to statically allocate the handles used for OpenVX objects in a non-cached region of DDR. The framework can log how many of these statically allocated structures an application actually uses, which helps if these limits need to be modified.
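The idea of a statically sized handle pool whose usage can be logged can be sketched as follows. This is a minimal model under stated assumptions: `POOL_SIZE`, `obj_desc_t`, and the `pool_*` functions are hypothetical and are not TIOVX names, and plain C cannot express the non-cached DDR placement used by the real framework. The `max_used` high-water mark is the kind of figure worth logging when deciding whether a limit must change.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical compile-time limit; stands in for the framework's own limits. */
#define POOL_SIZE 4

typedef struct {
    int in_use;
    int object_type; /* e.g. image or graph; illustrative only */
} obj_desc_t;

typedef struct {
    obj_desc_t desc[POOL_SIZE]; /* allocated once, statically */
    size_t used;                /* descriptors currently handed out */
    size_t max_used;            /* high-water mark, the value worth logging */
} obj_desc_pool_t;

static void pool_init(obj_desc_pool_t *pool)
{
    for (size_t i = 0; i < POOL_SIZE; i++) {
        pool->desc[i].in_use = 0;
        pool->desc[i].object_type = 0;
    }
    pool->used = 0;
    pool->max_used = 0;
}

/* Returns a free descriptor, or NULL when the static pool is exhausted. */
static obj_desc_t *pool_alloc(obj_desc_pool_t *pool, int object_type)
{
    for (size_t i = 0; i < POOL_SIZE; i++) {
        if (!pool->desc[i].in_use) {
            pool->desc[i].in_use = 1;
            pool->desc[i].object_type = object_type;
            pool->used++;
            if (pool->used > pool->max_used) {
                pool->max_used = pool->used;
            }
            return &pool->desc[i];
        }
    }
    return NULL;
}

static void pool_free(obj_desc_pool_t *pool, obj_desc_t *d)
{
    assert(d != NULL && d->in_use);
    d->in_use = 0;
    pool->used--;
}
```

Tracking the peak rather than the current count matters because the limit must cover the busiest moment of the application, not its state at exit.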

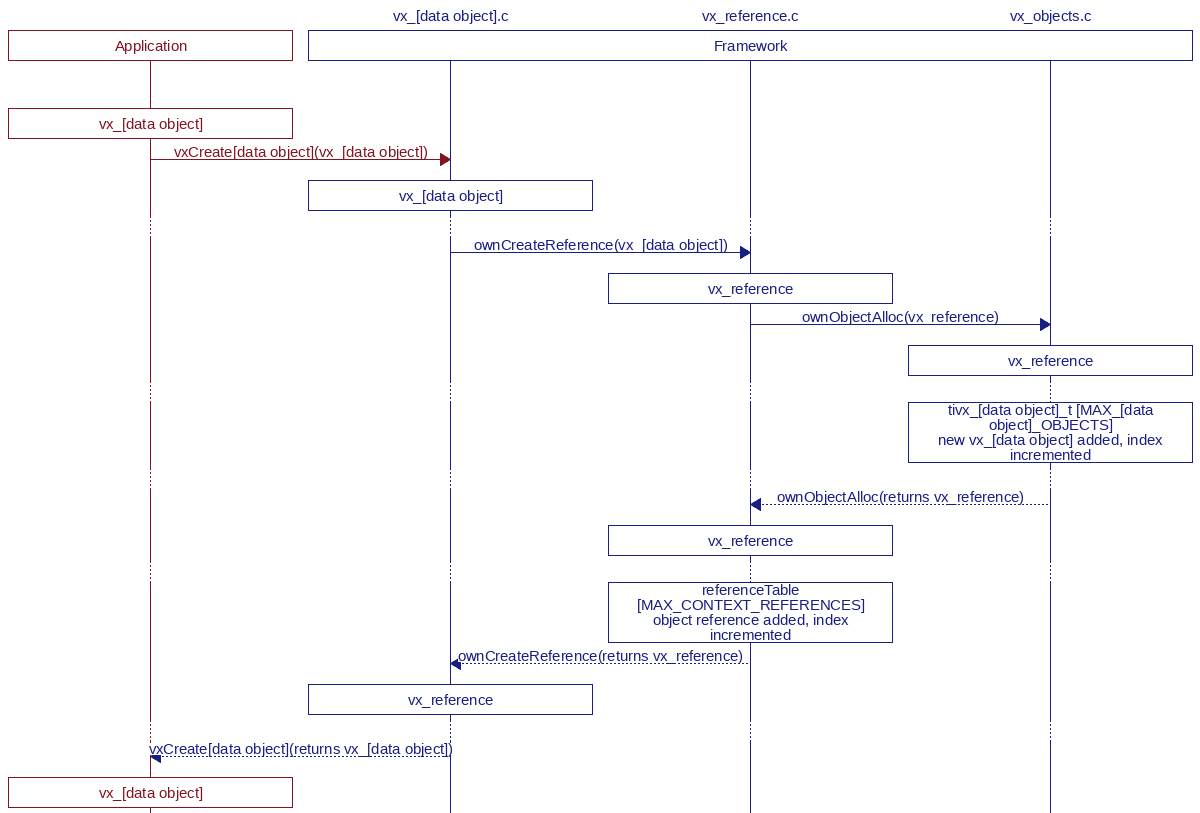

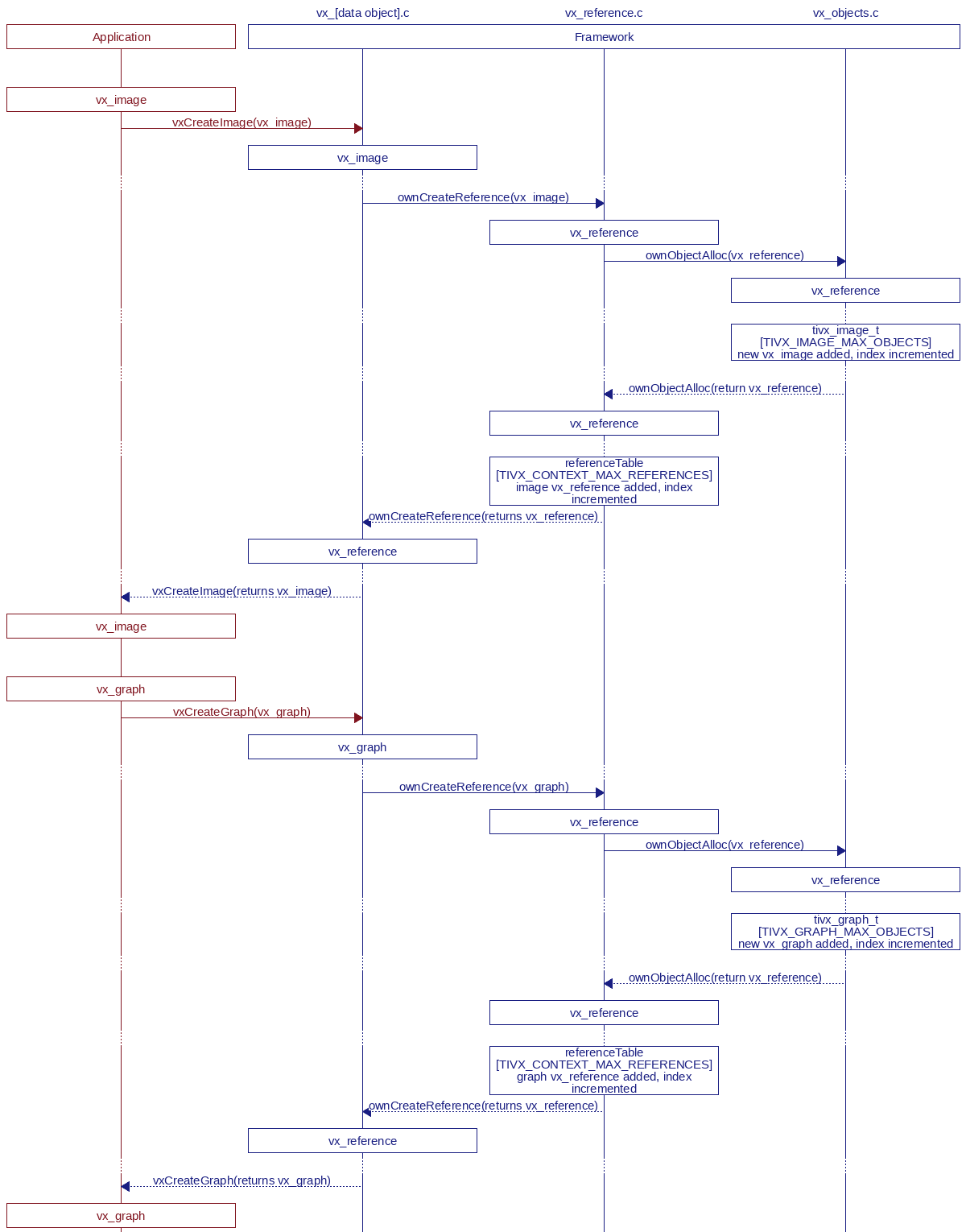

As mentioned previously, the "Create" phase calls the appropriate create APIs for each of the OpenVX data objects within the application. With respect to memory management, the create APIs do not allocate the memory needed for the data objects; that memory is allocated in the next phase, the verify phase. Instead, each create API simply returns an opaque handle to the OpenVX object, so the memory is not directly accessible to the application. These handles point to the object descriptors mentioned earlier, which reside in a non-cached region of DDR, as well as to a set of cached attributes, including the OpenVX object data buffers.
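A minimal sketch of the create step's deferred allocation, assuming a simplified image object: `struct image_obj`, `image_handle`, and the `image_*` functions are hypothetical, and `malloc` stands in for taking a descriptor from the framework's pool. Create records only the attributes; the data buffer pointer stays NULL until a later phase allocates it.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical image object. In a real framework this definition would stay
 * private, so the application only ever sees the image_handle pointer. */
struct image_obj {
    unsigned width;
    unsigned height;
    unsigned bytes_per_pixel;
    void *buffer; /* data buffer: stays NULL until a later phase allocates it */
};

typedef struct image_obj *image_handle;

/* "Create": record attributes only; no data memory is allocated here. */
static image_handle image_create(unsigned width, unsigned height, unsigned bpp)
{
    image_handle img = malloc(sizeof *img);
    if (img != NULL) {
        img->width = width;
        img->height = height;
        img->bytes_per_pixel = bpp;
        img->buffer = NULL;
    }
    return img;
}

/* Size the data buffer will need once it is allocated. */
static size_t image_buffer_size(image_handle img)
{
    assert(img != NULL);
    return (size_t)img->width * img->height * img->bytes_per_pixel;
}

static void image_destroy(image_handle img)
{
    if (img != NULL) {
        free(img->buffer); /* free(NULL) is a no-op if never allocated */
        free(img);
    }
}
```

Deferring the buffer lets the framework size and place every allocation in one pass once the whole graph is known.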

This call sequence highlights the process from the application and framework perspectives when an object is created:

As an example, the following illustrates this procedure when creating image and graph objects:

If the memory must be accessed within the application or initialized to a specific value, OpenVX provides standard APIs for mapping or copying the data object. For example, the image object has the map and unmap APIs vxMapImagePatch and vxUnmapImagePatch, as well as the copy API vxCopyImagePatch. If the memory must be accessed before the verify phase, the framework allocates the buffer(s) associated with the object in shared memory so that it can be accessed.
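The on-demand allocation triggered by an early map or copy can be modeled as below. This is a sketch under assumptions: `data_obj_t`, `ensure_allocated`, `obj_map`, and `obj_copy_in` are hypothetical stand-ins for the map and copy paths (not vxMapImagePatch or vxCopyImagePatch), and `calloc` stands in for the shared-memory allocator.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical data object whose buffer is allocated lazily. */
typedef struct {
    size_t size;
    unsigned char *buffer; /* NULL until first access or verify */
} data_obj_t;

/* Allocate on first use; calloc stands in for the shared-memory allocator. */
static int ensure_allocated(data_obj_t *obj)
{
    if (obj->buffer == NULL) {
        obj->buffer = calloc(obj->size, 1);
    }
    return obj->buffer != NULL;
}

/* Map-style access: hands the application a pointer into the buffer,
 * allocating it first if the object has not been allocated yet. */
static unsigned char *obj_map(data_obj_t *obj)
{
    assert(obj != NULL);
    return ensure_allocated(obj) ? obj->buffer : NULL;
}

/* Copy-style access: copies user data into the object, also allocating
 * on demand. Returns 0 on success, -1 on error. */
static int obj_copy_in(data_obj_t *obj, const void *src, size_t n)
{
    assert(obj != NULL && src != NULL);
    if (n > obj->size || !ensure_allocated(obj)) {
        return -1;
    }
    memcpy(obj->buffer, src, n);
    return 0;
}
```

Both paths funnel through one helper, so an object that was touched early is simply skipped when the verify phase later allocates the remaining buffers.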

The allocation and mapping of the OpenVX data objects occur during the verify phase. The memory for each data object is allocated from a carved-out region of DDR shared memory using the tivxMemBufferAlloc API internally within the framework. This allocation is done on the host CPU. Because this allocation is performed within the vxVerifyGraph call, the framework reports at a single point in the application whether the graph is valid and can be scheduled: vxVerifyGraph returns an error status if it is not.
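A minimal sketch of allocating every object from a fixed carve-out during verify and reporting any failure through one return value. The 1024-byte arena, the bump allocator, and the names `shared_alloc` and `verify_allocate` are assumptions for illustration; they are not tivxMemBufferAlloc or its real allocation strategy.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the carved-out shared-memory region. */
#define SHARED_REGION_SIZE 1024u

static _Alignas(8) unsigned char shared_region[SHARED_REGION_SIZE];
static size_t shared_offset;

/* Bump allocator over the carve-out; returns NULL when it is exhausted. */
static void *shared_alloc(size_t size)
{
    size = (size + 7u) & ~(size_t)7u; /* round up to keep 8-byte alignment */
    if (size > SHARED_REGION_SIZE - shared_offset) {
        return NULL;
    }
    void *p = &shared_region[shared_offset];
    shared_offset += size;
    return p;
}

typedef struct {
    size_t size;
    void *buffer;
} data_obj_t;

/* Models the allocation step of verify: every object is allocated here,
 * and any failure is reported through a single return value. */
static int verify_allocate(data_obj_t *objs, size_t count)
{
    assert(objs != NULL || count == 0);
    size_t mark = shared_offset;
    for (size_t i = 0; i < count; i++) {
        objs[i].buffer = shared_alloc(objs[i].size);
        if (objs[i].buffer == NULL) {
            /* roll back so a failed verify leaves no partial allocation */
            for (size_t j = 0; j < i; j++) {
                objs[j].buffer = NULL;
            }
            shared_offset = mark;
            return -1;
        }
    }
    return 0;
}
```

Concentrating allocation here means the application checks one status instead of handling out-of-memory at each node or each frame.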

In addition to allocating memory for the OpenVX data objects, vxVerifyGraph also allocates the local memory needed for each kernel instance. In this context, local memory refers to memory regions accessible by the core on which the target kernel runs. This local memory allocation occurs as vxVerifyGraph loops through all the nodes within the graph and calls the "create" callback of each node. The design intention of the framework is that all memory allocation occurs in the create callback of each kernel, thereby avoiding memory allocation at run time inside the process callbacks. For more information about the callbacks for each kernel, see vxAddUserKernel and tivxAddTargetKernel. For more information about the order in which these callbacks are called during vxVerifyGraph, see User Target Kernels.
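The split between allocating in create and only using memory in process can be sketched as follows. The callback signatures are simplified and hypothetical; they do not match the callbacks registered with tivxAddTargetKernel. `verify_nodes` models only the part of vxVerifyGraph that walks the nodes and calls each create callback, and `malloc` stands in for the framework's local-memory allocator.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical node with simplified create/process/delete callbacks. */
typedef struct node node_t;
typedef int (*kernel_cb)(node_t *node);

struct node {
    kernel_cb create, process, destroy;
    size_t scratch_size;
    void *local_mem; /* kernel-instance local memory */
};

static int my_create(node_t *n)
{
    n->local_mem = malloc(n->scratch_size); /* allocate once, up front */
    return n->local_mem != NULL ? 0 : -1;
}

static int my_process(node_t *n)
{
    /* run-time path: uses local_mem but never allocates */
    return n->local_mem != NULL ? 0 : -1;
}

static int my_delete(node_t *n)
{
    free(n->local_mem);
    n->local_mem = NULL;
    return 0;
}

/* Walk the nodes and call each create callback; on failure, undo the
 * nodes already created and report a single error code. */
static int verify_nodes(node_t *nodes, size_t count)
{
    assert(nodes != NULL || count == 0);
    for (size_t i = 0; i < count; i++) {
        if (nodes[i].create(&nodes[i]) != 0) {
            while (i-- > 0) {
                nodes[i].destroy(&nodes[i]);
            }
            return -1;
        }
    }
    return 0;
}
```

Keeping `malloc` out of `my_process` is what gives the process path predictable timing.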

Each kernel instance has its own context, which allows the kernel instance to store context variables in a data structure using the tivxSetTargetKernelInstanceContext and tivxGetTargetKernelInstanceContext APIs. Local memory can therefore be allocated and stored within this context structure. Two simple APIs are provided to allocate and free kernel memory: tivxMemAlloc and tivxMemFree. These APIs allocate memory from the various memory regions listed in tivx_mem_heap_region_e. For instance, if multiple algorithms run consecutively within a kernel, intermediate data can be allocated within the kernel context. The regions in tivx_mem_heap_region_e also include options for allocating persistent memory in DDR or non-persistent, scratch memory in DDR.
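A rough model of persistent versus scratch regions, plus a per-instance context slot. Everything here is hypothetical: `region_t` does not reproduce the values of tivx_mem_heap_region_e, `region_alloc` is not tivxMemAlloc, and `set_context`/`get_context` only mirror the idea behind tivxSetTargetKernelInstanceContext and tivxGetTargetKernelInstanceContext. The sketch assumes scratch memory is reclaimed as a whole (for example between process calls) while persistent memory lives until delete.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical regions; names and sizes are illustrative only. */
typedef enum { REGION_PERSISTENT, REGION_SCRATCH, REGION_COUNT } region_t;

typedef struct {
    _Alignas(8) unsigned char mem[256];
    size_t offset;
} heap_t;

static heap_t heaps[REGION_COUNT];

/* Bump-allocate from the chosen region; NULL if it is full. */
static void *region_alloc(region_t r, size_t size)
{
    assert(r < REGION_COUNT);
    heap_t *h = &heaps[r];
    size = (size + 7u) & ~(size_t)7u;
    if (size > sizeof h->mem - h->offset) {
        return NULL;
    }
    void *p = &h->mem[h->offset];
    h->offset += size;
    return p;
}

/* Scratch contents are not preserved: the whole region is reclaimed at once. */
static void scratch_reset(void)
{
    heaps[REGION_SCRATCH].offset = 0;
}

/* Per-instance context slot holding a pointer to persistent state. */
typedef struct {
    void *context;
} kernel_instance_t;

static void set_context(kernel_instance_t *k, void *ctx) { k->context = ctx; }
static void *get_context(const kernel_instance_t *k) { return k->context; }
```

Usage: a create callback would allocate its state from the persistent region and store it with `set_context`, while intermediate buffers shared by consecutive algorithms come from the scratch region and can be reused across instances.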

During the scheduling and execution of OpenVX graphs, the OpenVX data buffers reside in external shared memory, and the pointers to these data buffers are passed along to subsequent nodes in the graph. Inside each node's process callback, the node may access the external shared memory via the pointers passed from the previous node. The tivxMemBufferMap and tivxMemBufferUnmap APIs encapsulate the mapping and cache maintenance operations needed to map the shared memory to the target core. After a node completes, the framework triggers the nodes that depend on the output of the completed node.
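The dependency-driven triggering can be illustrated with a small dataflow scheduler: each node counts the producers it is still waiting on, and completing a node decrements its consumers' counts, queuing any that reach zero. This is a hypothetical, single-threaded sketch (`dag_t`, `dag_add_edge`, `dag_run`), not the TIOVX scheduler, and it omits the map/unmap and cache maintenance steps.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_NODES 8

/* A node becomes ready once every node producing one of its inputs is done. */
typedef struct {
    size_t num_nodes;
    int edge[MAX_NODES][MAX_NODES]; /* edge[a][b] = 1 if b consumes a's output */
    size_t pending[MAX_NODES];      /* number of producers feeding each node */
} dag_t;

static void dag_add_edge(dag_t *g, size_t from, size_t to)
{
    assert(from < g->num_nodes && to < g->num_nodes);
    if (!g->edge[from][to]) {
        g->edge[from][to] = 1;
        g->pending[to]++;
    }
}

/* Runs the graph once, writing the completion order into 'order'.
 * Returns the number of nodes executed. */
static size_t dag_run(const dag_t *g, size_t order[MAX_NODES])
{
    size_t queue[MAX_NODES], pending[MAX_NODES];
    size_t head = 0, tail = 0, done = 0;
    assert(g->num_nodes <= MAX_NODES);
    for (size_t i = 0; i < g->num_nodes; i++) {
        pending[i] = g->pending[i];
        if (pending[i] == 0) {
            queue[tail++] = i; /* source nodes start immediately */
        }
    }
    while (head < tail) {
        size_t n = queue[head++];
        order[done++] = n; /* "process" node n */
        for (size_t m = 0; m < g->num_nodes; m++) {
            /* completion of n triggers m once all of m's inputs are ready */
            if (g->edge[n][m] && --pending[m] == 0) {
                queue[tail++] = m;
            }
        }
    }
    return done;
}
```

Copying `pending` into a local array leaves the graph description untouched, so the same graph can be executed repeatedly.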

As mentioned previously, the "Delete" phase calls the appropriate delete APIs for each of the OpenVX data objects within the application. At this point, the data buffer(s) of the OpenVX data objects are freed from shared memory using the tivxMemBufferFree API. This freeing occurs within each object's release API, such as vxReleaseImage for the image object.
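OpenVX objects are reference counted, so a release call frees the data buffer only once no references remain (for example, after both the application and a graph that uses the object have released it). A minimal sketch of that behavior, assuming hypothetical names (`ref_obj_t`, `obj_create`, `obj_retain`, `obj_release`) and using `free` in place of tivxMemBufferFree; for brevity it allocates the buffer at create time. Like vxReleaseImage, `obj_release` takes a pointer to the handle and clears it.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical reference-counted object owning a data buffer. */
typedef struct {
    int ref_count;
    void *buffer;
} ref_obj_t;

static ref_obj_t *obj_create(size_t size)
{
    ref_obj_t *o = malloc(sizeof *o);
    if (o == NULL) {
        return NULL;
    }
    o->buffer = malloc(size);
    if (o->buffer == NULL) {
        free(o);
        return NULL;
    }
    o->ref_count = 1;
    return o;
}

static void obj_retain(ref_obj_t *o)
{
    o->ref_count++;
}

/* Drops one reference and clears the caller's handle.
 * Returns 1 if this call freed the object and its buffer, 0 otherwise. */
static int obj_release(ref_obj_t **handle)
{
    ref_obj_t *o = *handle;
    assert(o != NULL && o->ref_count > 0);
    *handle = NULL;
    if (--o->ref_count == 0) {
        free(o->buffer);
        free(o);
        return 1;
    }
    return 0;
}
```

Clearing the handle inside the release call guards against the application reusing a dangling pointer.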

As mentioned above, the default behavior of OpenVX data buffer transfer is to write intermediate buffers to DDR before subsequent nodes read them. Below are a few recommendations for optimizing memory transfer: